In her seminal book *Leadership and the New Science*, Margaret Wheatley takes a clear stance on the kind of leadership today’s world requires. She urges readers to stop clinging to the limits of “Newtonian” science and to embrace the science of systems and complexity. Wheatley writes,

“…. The science has changed. If we are to continue to draw from science to create and manage organizations, to design research, and to formulate ideas … then we need to at least ground our work in the science of our times. We need to search for the principles of organization to include what is presently known about how the universe organizes.” (p.8)

Collective impact is a living example of “new science” in action. The concept arises from the notion that systemic problems require systemic solutions; that “business as usual” doesn’t cut it any longer; that throwing money at stand-alone programs is not the way to gain traction on long-standing social problems. In his plenary speech at the recent convening – Catalyzing Large Scale Change: The Funder’s Role in Collective Impact – FSG’s John Kania urged the audience to abandon traditional conceptions of how problems are solved and “embrace emergence” when it comes to collective impact.

As collective impact has become more popular, however, a lingering question has arisen in its wake: How in the world do we evaluate collective impact?

After pondering this question for the past several months, my colleagues have produced an excellent three-part guide to evaluating collective impact. The guide lays out an overall framework, along with examples of outcomes and indicators at different stages of a collective impact effort. However, anyone who seeks to use the guide as a recipe would be missing the point entirely.

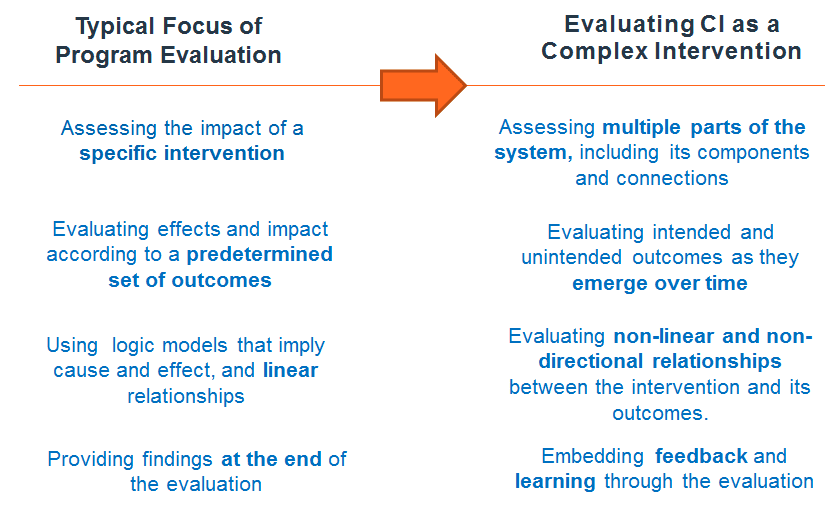

This is because evaluating collective impact as a complex intervention requires the same kind of “paradigm shift” as the notion of collective impact itself. It requires a change in how one views the role and nature of evaluation. It involves understanding the new science of complexity, uncertainty, and emergence as applied to evaluation, and taking a systems approach to evaluation, just as one would in solving chronic social problems. For instance, the new science of evaluation recognizes that context and connections matter, that non-linearity is the name of the game, and that unintended consequences are as important as expected outcomes, if not more so. Feedback and ongoing learning become the “fuel” that runs the evaluation engine.

The table above captures ways in which evaluating collective impact differs from traditional program evaluation. We are in the early stages of expanding on these ideas to produce a guide that will lay out a series of propositions for how one can effectively evaluate complexity. As we investigate these ideas further, we would love to hear from you:

In what ways do you see evaluating collective impact embracing the new science?