What images come to mind when you hear the word evaluation? A teacher grading an exam? A dashboard or scorecard with outcomes highlighted in green, yellow, or red to show progress? A logic model mapping a program intervention to its intended outcomes? Fewer people picture a room full of people reflecting on and discussing data together, or think of evaluation as spurring an energizing, collaborative conversation about what a program hopes to achieve and the assumptions and external factors that can help or hinder its success.

The predominant mental model around evaluation does not account for the variety of evaluation purposes and approaches. And it doesn’t highlight the role of evaluation in strengthening collaboration, solving problems, or informing strategic decision-making.

In fact, there are many different ways that organizations get information to make better strategic decisions. Considering the various options around inquiry can help break through people’s existing mental models of what evaluation is—or is not.

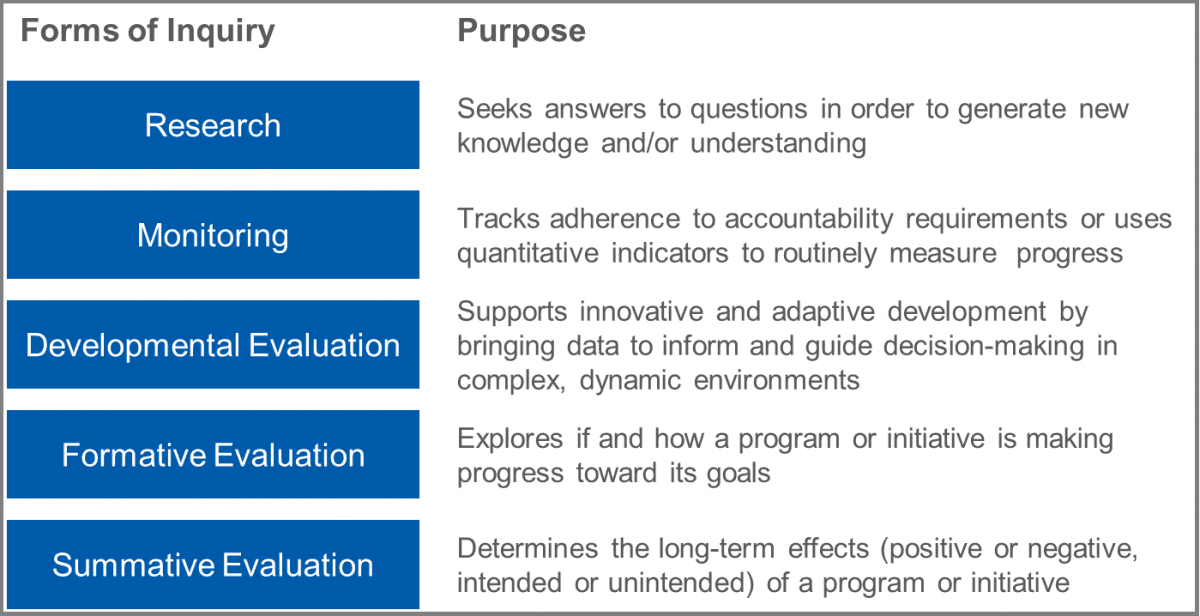

The graphic below briefly explains the key differences among the most common types of inquiry.

Here are a few examples from FSG’s work:

- Research: With support from the W.K. Kellogg Foundation, FSG produced the Markers that Matter report, which compiles a set of 48 early childhood indicators that reflect healthy development. The guiding question for this research study was: “What are the indicators that guide healthy development of young children?”

- Monitoring: With support from the Staten Island Foundation, FSG worked with multiple organizations across sectors to develop detailed, evidence-informed strategies and related performance measures for the Tackling Youth Substance Abuse Initiative. A guiding question for developing the shared measurement system for this collective impact effort was: “What indicators should we use to track progress toward our goal?”

- Developmental Evaluation: At the start of its new college access initiative, Grand Rapids Community Foundation hired FSG to conduct a developmental evaluation to assess how well the Challenge Scholars initiative was evolving as it launched and expanded into new schools. A guiding question during the developmental phase of the evaluation was: “How is the Challenge Scholars initiative taking shape?”

- Formative Evaluation: The California Endowment commissioned FSG to conduct a strategic review (formative evaluation) 3 years into its 10-year Building Healthy Communities place-based and statewide strategy. One of several guiding questions for the formative evaluation was: “What is helping or hindering progress toward the foundation’s goals?”

- Summative Evaluation: The Robert Wood Johnson Foundation commissioned FSG to conduct a retrospective assessment (summative evaluation) of the foundation’s influence and impact on substance-use prevention and treatment policy and practice over 2 decades of investment. A guiding question for the evaluation was: “What impact did the foundation have on changes in substance-use prevention and treatment policies and practice?”

When choosing among these options, 4 questions can guide you to the appropriate approach:

- What is the purpose of the inquiry?

- Who will use the data and information and in what ways?

- What question(s) do you want to answer?

- At what point in the lifecycle of the program or initiative will the inquiry occur (during planning, during implementation, or after its conclusion)?

I hope you find this taxonomy helpful in your own work. If you have additional examples or ways of explaining these terms, please share in the comments section below.
